Recently I've been doing a lot of work leveraging the Tableau Data Server. This is a great new feature of Tableau Server that allows BI groups to publish standard sets of data to Tableau for analysts to consume. This is a great alternative to traditional approaches which take 100x as long plus don't allow for rapid prototyping of the data asset.

Although this feature is great it hasn't always worked the best. The most often problem I run into is creating the extract itself when dealing with “large” data volumes of 20M+ rows. I developed a work-around to leverage the power of the server to create the extract and save your poor laptop from having to crunch all that data for you. Here goes…if you end up trying this please let me know how it works for you or if you have any alternatives. I'd like to use this to feedback to Tableau on how to improve this feature of their product.

1. Create a view in your source database that pulls a small amount of the total rows you want in your extract. In SQL Server I do this using the ‘top' function. In MySQL you'll need to use the Limit() function. Before moving to the next step test that querying this view works in a reasonable time frame from your local query environment (eg. SSMS, Toad, SQLYog, etc.) and there are no obvious data issues (nulles, dupes, etc.)

2. Create your extract in Tableau importing all data from the view. Make sure to create whatever hierarchies you want, rename columns so they make sense, correct any data types Tableau messed up, and whatever calculations you know of at this point. Again, before moving on, test that this works relatively well with this subset of data.

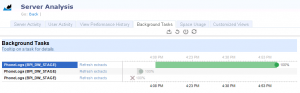

3. Publish your data extract to Tableau Server. With the extract now on the server, go and manually run a full refresh. Watch the progress in the Background Tasks Maintenance View. Once it finishes successfully test that you can connect to it and all looks good.

4. Now, go change your view to return all rows. Remove whatever condition you put in to trim down the rows returned. This should allow the extract to pull in the entire data set next time it is refreshed on the Tableau Server.

5. Go back to Tableau Server and execute another full refresh of your extract. This time, the extract should get the entire population of data and the beauty is all the horsepower of your Tableau Server will be used to create the extract instead of your poor laptop.

6. Make sure the extract finishes on the server (this may take a while) and then test again that you can connect via the Tableau Data Server. Lastly, revel in your accomplishment 🙂

Hope this helps! Please let me know how it works for you if you end up trying it out…

Here are the results of my most recent use of this technique. I started w/ a view only showing 10 rows then changed it behind the scenes to pull in all 35M rows. Works like a charm!

Cheers,

Ben

Here’s another technique that doesn’t rely on having DDL permissions on the database: tableaulove.tumblr.com/post/18945358848/how-to-publish-an-unpopulated-tableau-extract

Hi Ben, nice workaround.

Is this your preferred method (querying OLTP Data Sources) for analyzing Big data?

If you write about millions of rows, wouldn’t OLAP be a good and fast way to analyze this? Are there any reasons for not using OLAP instead of OLTP?

I’m very interested in the question whether using OLAP (SSAS) or OLTP (MS SQL Server) as the preferred Data Source for Tableau.

This looks great, Russell! I’ll give this a go and see how it works in my case. Thanks for sharing!

Hi David,

I think OLAP is a good option if your end-users want their interface to be Excel. Outside of that I generally try not to use Tableau pointed at cubes due to some added complexity. I’m not saying Tableau doesn’t work with cubes rather, if you’re using Tableau I would just rather not use them since it can handle almost everything a cube can w/ a Tableau Data Extract and avoid having to write MDX.

I’ve found Tableau’s engine for data extracts incredibly powerful even with larger sets of data. The next thing I am really excited to explore is how to interface with true ‘Big Data’ in Hadoop w/ Hive, Vertica or Greenplum. I hope this helps!

Cheers,

Ben

Hi Ben!

The workaround you described is actually what I’ve been doing to deal with large extracts, but I’m running into another issue: after 7,200 seconds the Tableau server interrupts the refreshing of the extract and I’m getting an error: “Timeout error. Cancelling task that took longer than 7200 seconds”.

I searched but have not found a way to change the timeout value yet. Has anyone also experienced this issue?

The 7200 seconds timeout is because of the “backgrounder.querylimit” parameter. You can set this higher with tabadmin.

Pawel,

I hope all is well, and sorry for the delay in responding. Here is the command you need to issue on your Tableau Server in order to fix this.

tabadmin set backgrounder.querylimit XXX

XXX = whatever time limit you want in seconds.

Ref: onlinehelp.tableausoftware.com/v7.0/server/en-us/tabadmin.htm

Cheers,

Ben

Hello Ben

The solution you propose is exactly what I was looking for. But, unfortunately, I was not able to reproduce your recipe. Below you find what I tried – and my questions. I think answering them would be useful for other people too.

Many thanks for any help in advance

Dimitrios

“1. Create a view.”

a) In Tableau Desktop I created a new, empty Tableau Workbook (twb).

b) I connected to a PostgreSQL data base named “MyDB”, and entered the following custom query: SELECT “Timestamp”, “VariableId”, “Type”, “Subtype”, “Value” FROM “MyBigTable” LIMIT 1000. The name I gave to the data connection was “MyDB_connection”.

“2. Create your extract in Tableau.”

c) In Tableau Desktop I executed the menu command “Data/MyDB/Extract Data…”

d) I pressed the button “Extract” and saved the extract in a file name “MyDB_connection.tde”. (Right-clicking “MyDB_connection” now showed a check mark before “Use Extract”).

“3. Publish your data extract to Tableau Server.”

e) In Tableau Desktop: “Connect to Data/Tableau Data Extract” in Tableau Desktop and selected the file “MyDB_connection.tde”. I named the new connection “MyDB_connection Extract”.

f) In Tableau Desktop: “Data/MyDB_connection Extract/Publish to Server…”, giving the extract on the server the same name, “MyDB_connection Extract”.

g) In Tableau Desktop: “Data/MyDB_connection Extract/Refresh” (but nothing seemed to happen, really).

“4. Now, go change your view to return all rows.”

h) In Tableau Desktop: “Data/MyDB_connection/Edit Connection…”; modified the custom query by removing the “LIMIT 1000” statement.

i) I answered to the dialog “A data extract is active etc.” with “OK”

“5. Go back to Tableau Server and execute another full refresh of your extract.”

>> How do I accomplish this? On the Server I apparently can only activate schedules (running a refresh every 15 min etc.)

“6. Make sure the extract finishes on the server”

>> How do I accomplish this? (But I did not get that far anyway).

Sorry for the delay in responding. I think the first step you are using custom SQL when what I’m suggesting is creating a view in your database w/ the row limit and connecting to it directly in tableau as if it were a single table.

Once you publish your workbook to server, you should then be able to browse to it, check the box next to the workbook and click the option for ‘scheduled tasks’ and then add full refresh > run now. (image attached)

Hope this helps!

Hi Ben,

Wanted to seek your expertise on Tableau data extract

1) If I want to publish twb & tde separately to the tableau server, I suppose this approach is workable. The issue lies in the fact that I have packaged my workbook (in twbx) that contains a data extract. Can i unpackage this file and use the TDE instead to extract data and publish it to the tableau server?

2) Assuming I have 1 TDE and have multiple data sources, I can connect all these data sources to this right? If I were to do a one time refresh of the TDE, do all the workbooks connecting to this get updated as well?

Let me know!

Thanks.

Hi Joshua, if I understand your question correctly here is what I would do:

1 – Open my workbook w/ the extract (assuming it’s pulling from a view you’ve created w/ a small row limit)

2 – Publish just the data source to Tableau Server

3 – Change your view to no longer have a row limit

4 – Refresh the data source on the server via schedule or using tabcmd refreshextracts (onlinehelp.tableausoftware.com/v8.0/server/en-us/tabcmd_cmd.htm#id4cdb3410-1c41-4dad-b1d2-306542ac9b32)

5 – Once that is complete, connect all your other workbooks/data sources to this version of the extract that lives on Tableau Server.

This should give you the desired outcome you’re looking for. When the refresh gets update all the other workbooks will be updated automatically and you’ll only have to manage the 1 copy of the data extract on the server.

Hi Ben, thanks for the info. Yes, this is what I am looking for.

Not sure if you have seen this “error message” before when you publish data source to the tableau server.

“The data source you are publishing contains 6 calculations that reference a different data source in this workbook. The calculations will not be valid outside of this workbook, and cannot be used on the server.”

The background of this is that I have two views and multiple reports connecting to them. It seems that Tableau is telling me that there are calculated fields that I have created that uses both data sources and that, when I publish them separately to the server, there may be some reports that cannot be displayed properly.

Would you able to shed some light on this?

Cheers,

Joshua

Hmmm, you might need to first publish them to server, then create the calculations. My guess is when you build a calc off an extract, then publish that extract independent of the workbook, the calcs won’t know where the other data source is. Although, it’s hard to say w/o seeing the workbook itself.

Hi, i am working for a client that doesnt provide me any create access to the db. I cant create a view. is there any alternative?

See Russel Christopher’s comment above

Hi Ben,

I need to extract 12 million rows of data

i have tried the following to create the large extracts. I have done the below steps

creating a sample view of 10 rows and then creating an extract of the data source and published to server

once published i did a manual refresh,tht is successfully ran.

Again i went back to the data source and removed the limit from the sql query . When i am pressing Go to Workbook , its taking too much time and it is creating an extract and taking too much time.

Please let me know where i went wrong

How but this method? databoss.starschema.net/generate-initial-extract-tableau-server/

No database access, no additional calculation on workbook level

Would this solution work for BigQuery’s size limit?

This method helped me out tremendously. Before I was extracting an entire 19+ million record view onto my local computer, then pushing that to Server. The entire process was taking me over 30 minutes.

With this process, creating an extract of 1000 records, took no time, then Server was able to reconstruct the 19+ million record extract in 2.5 minutes.

Thank you!

hi

I want t extract data from salesforce but it is showing an error. How can this be resolved?

Awesome!

I don’t think so, to get around that you’d have to do some magic inside BQ itself

Hi There, you need to put the row limit inside the view on the database itself. Once you edit the data source it will attempt to create the extract locally.

Ben,

I have a few hundred million rows of data (only 6 columns) I would like to extract. Does using this method on the server expand the capacity for the amount of data I can extract? Or will the limitations be the same?